Quick Answers

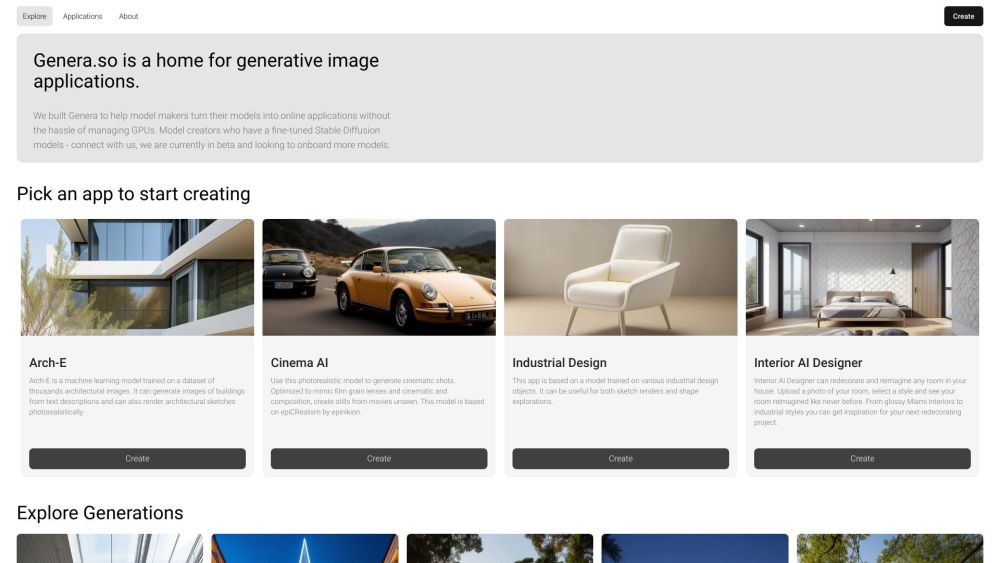

What exactly does “Launch Gen Image Apps in Minutes” mean?

It means turning an image model stored locally or hosted on Hugging Face into a working, publicly accessible web application in under five minutes, complete with input controls, real-time generation, and download options. No backend setup or frontend coding is needed.

Do I need to manage GPUs myself?

No. Genera.so allocates and scales GPU resources dynamically behind the scenes, balancing latency, throughput, and cost. You focus on your model; Genera.so handles the hardware.

Which models are supported?

Any PyTorch-based image generation model—including LoRAs, T2I adapters, inpainting and outpainting pipelines, and multi-stage architectures—as long as it follows standard inference patterns and can be exported to TorchScript or ONNX.
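To check the export requirement before uploading, a quick local test along the following lines may help. This is a minimal sketch, not Genera.so's own tooling: `TinyGenerator` and `export_and_reload` are hypothetical names standing in for your model and a helper you would write yourself, and it assumes a model whose forward pass can be traced with `torch.jit.trace`. It traces the model to TorchScript, saves and reloads it in memory, and confirms the reloaded module reproduces the eager output.

```python
import io

import torch
from torch import nn


class TinyGenerator(nn.Module):
    """Hypothetical stand-in for an image generator: latent vector -> 3x8x8 image."""

    def __init__(self, latent_dim: int = 16) -> None:
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(latent_dim, 3 * 8 * 8),
            nn.Tanh(),
        )

    def forward(self, z: torch.Tensor) -> torch.Tensor:
        # Reshape the flat output into (batch, channels, height, width).
        return self.net(z).view(-1, 3, 8, 8)


def export_and_reload(model: nn.Module, example: torch.Tensor) -> torch.jit.ScriptModule:
    """Trace the model to TorchScript, then round-trip it through an in-memory buffer."""
    model.eval()
    with torch.no_grad():
        traced = torch.jit.trace(model, example)
    buffer = io.BytesIO()
    torch.jit.save(traced, buffer)
    buffer.seek(0)
    return torch.jit.load(buffer)


torch.manual_seed(0)
model = TinyGenerator()
example = torch.randn(1, 16)
reloaded = export_and_reload(model, example)

# Compare the exported module against the original eager model.
with torch.no_grad():
    same = torch.allclose(model(example), reloaded(example))
print("TorchScript round trip matches eager output:", same)
```

Models with data-dependent control flow may need `torch.jit.script` instead of tracing, and multi-stage pipelines may have to be exported one stage at a time.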

Is framework compatibility guaranteed?

Yes. Genera.so natively supports PyTorch models and works with the Hugging Face Transformers, Diffusers, and Accelerate libraries. TensorFlow/Keras models are supported through conversion.

Can I tweak the generated UI?

Yes. The default interface is built for production use, and Genera.so offers low-code customization through a visual editor: adjust sliders, rename parameters, reorder inputs, add tooltips, or embed branding.

Do I need to know HTML, CSS, or JavaScript?

No. No HTML, CSS, or JavaScript is required at any stage. The entire workflow, from upload to deployment, is guided and visual, and is designed for technical users who would rather spend their time on models than on infrastructure.